Stage 7: fold + press

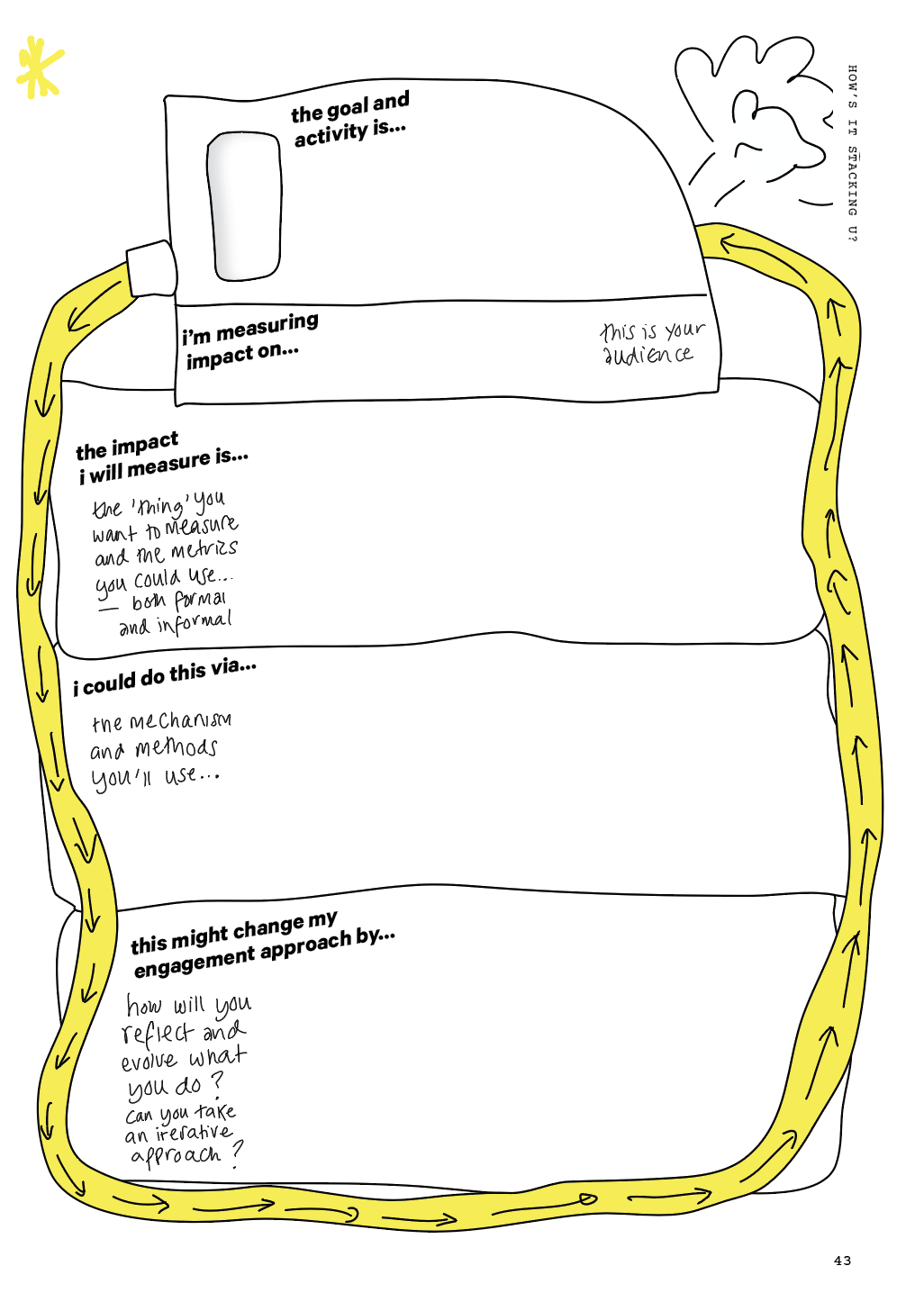

This stage has one step: how’s it stacking up?

We are nearing the end of the cycle! The only thing we have not ironed out is how one might evaluate the engagement. Or, put another way, what would indicate impact? ‘How’s it stacking up?’ helps you consider metrics for doing that.

What is ‘how’s it stacking up?’

This part of the process asks: what are the measures of success? What metrics are there that you could count, measure or survey, or observe more informally? And how can you evaluate impact both on audiences and on yourself and the other team members?

These metrics might include such things as gaining insight into the concerns people have about science (or specific research areas); making connections between the research and everyday life; making more informed decisions that draw on the research area; or other outcomes related to project goals. Some of these metrics may be immediate (for instance, whether an event was well received), whereas others may need to be measured over the long term.

The audiences and messages from Stage 5’s ‘What’s in the spin?’ will have captured what laundromat participants wanted their audience to get from the engagement experience. Consider how these (possibly quite intangible) things could be translated into things that could be measured. Take another look at the stage 4 wheel, and at any expectations from other parties such as funders. Sometimes funders will have specific metrics they want you to use. Will these meet the needs of the scientist-communicators as well as their funders? Is there a role or need here to bring in a professional or external evaluator?

Why?

It is not uncommon to charge on and do scicomm based on a sense that it is ‘a good thing’. And don’t get us wrong: it usually is. There have been suggestions that, for instance, correcting false information can lead to a ‘backfire effect’, where the corrective information entrenches the false beliefs further. This isn’t necessarily the case, and ‘communicators should not avoid giving corrective information due to backfire concerns’ (Swire-Thompson et al., 2022). But as scientist-communicators, having some way to quantify the ‘good thing’ is advantageous. It’s what allows us to, in a design sense, iterate and evolve the science communication offering with purpose. It also helps build evidence to apply for further support.

In addition, encouraging scientist-communicators to reflect on the impact on themselves, and to think actively about how undertaking public engagement affects their roles as scientist, researcher and/or science communicator, helps flesh out an understanding of personal motivations, some of which might have been specified in the stage 4 wheel. Even simple evaluation can help measure whether these motivations are being nourished. It can also encourage a reflexive check-in on engagement, to make sure it’s sustainable for the person doing the scicomm and not a highway to burnout.

This step does not include training on evaluation, though guidance on evaluation can often come from peer discussion. The point is really to get evaluation on the radar and encourage reflection and reflexivity.

Zine workbook page 43 with the How’s it stacking up? template